AlphaZero Crushes Stockfish In New 1,000-Game Match

In news reminiscent of the initial AlphaZero shockwave last December, the artificial intelligence company DeepMind released astounding results from an updated version of the machine-learning chess project today.

The results leave no question, once again, that AlphaZero plays some of the strongest chess in the world.

The updated AlphaZero crushed Stockfish 8 in a new 1,000-game match, scoring +155 -6 =839. (See below for three sample games from this match with analysis by Stockfish 10 and video analysis by GM Robert Hess.)
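To put the +155 -6 =839 result in more familiar terms, the sketch below converts it to a match score and an approximate rating gap. It assumes the standard logistic Elo expectation formula; that conversion is our own illustration and is not a figure reported by DeepMind. The function names are hypothetical.

```python
import math


def match_score(wins: int, losses: int, draws: int) -> float:
    """Fraction of available points scored (win = 1, draw = 0.5, loss = 0)."""
    games = wins + losses + draws
    return (wins + 0.5 * draws) / games


def implied_elo_gap(score: float) -> float:
    """Rating difference implied by a score under the logistic Elo model.

    Assumption: the usual 400-point logistic curve applies to engine matches.
    """
    return 400 * math.log10(score / (1 - score))


# Result reported for AlphaZero vs Stockfish 8: +155 -6 =839
score = match_score(wins=155, losses=6, draws=839)
gap = implied_elo_gap(score)
print(f"Match score: {score:.2%}")
print(f"Implied Elo gap: about {gap:.0f} points")
```

Under that assumption, a 57.45 percent score corresponds to a gap of roughly 50 Elo points. The high draw rate is why a lopsided win-loss count translates into a fairly modest rating difference.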

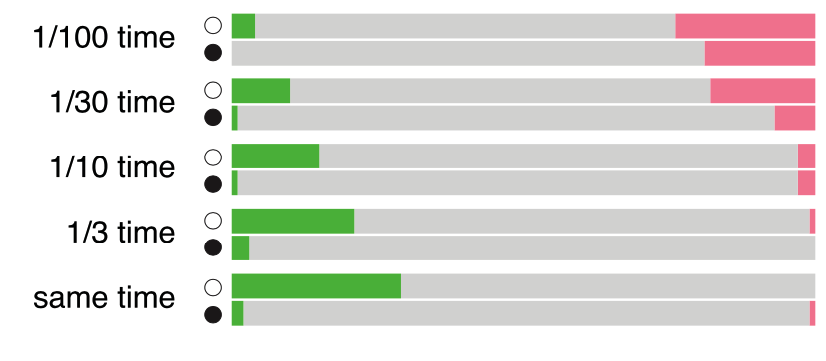

AlphaZero also bested Stockfish in a series of time-odds matches, soundly beating the traditional engine even at time odds of 10 to 1.

In additional matches, the new AlphaZero beat the "latest development version" of Stockfish, with results virtually identical to those of the match vs Stockfish 8, according to DeepMind. The pre-release copy of the journal article, which is dated Dec. 7, 2018, does not specify the exact development version used.

[Update: Today's release of the full journal article specifies that the match was against the latest development version of Stockfish as of Jan. 13, 2018, which was Stockfish 9.]

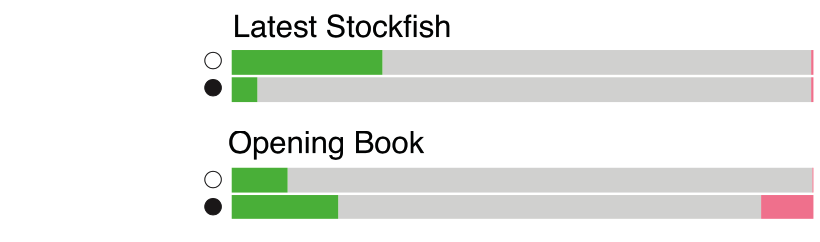

The machine-learning engine also won all matches against "a variant of Stockfish that uses a strong opening book," according to DeepMind. Adding the opening book did seem to help Stockfish, which finally won a substantial number of games when AlphaZero was Black—but not enough to win the match.

AlphaZero's results (wins green, losses red) vs the latest Stockfish and vs Stockfish with a strong opening book. Image by DeepMind via Science.

The results will be published in an upcoming article by DeepMind researchers in the journal Science and were provided to selected chess media by DeepMind, which is based in London and owned by Alphabet, the parent company of Google.

The 1,000-game match was played in early 2018. In the match, both AlphaZero and Stockfish were given three hours per game plus a 15-second increment per move. This time control would seem to make obsolete one of the biggest arguments against the impact of last year's match, namely that the 2017 time control of one minute per move played to Stockfish's disadvantage.

With three hours plus the 15-second increment, no such argument can be made, as that is an enormous amount of playing time for any computer engine. In the time odds games, AlphaZero was dominant up to 10-to-1 odds. Stockfish only began to outscore AlphaZero when the odds reached 30-to-1.

AlphaZero's results (wins green, losses red) vs Stockfish 8 in time odds matches. Image by DeepMind via Science.

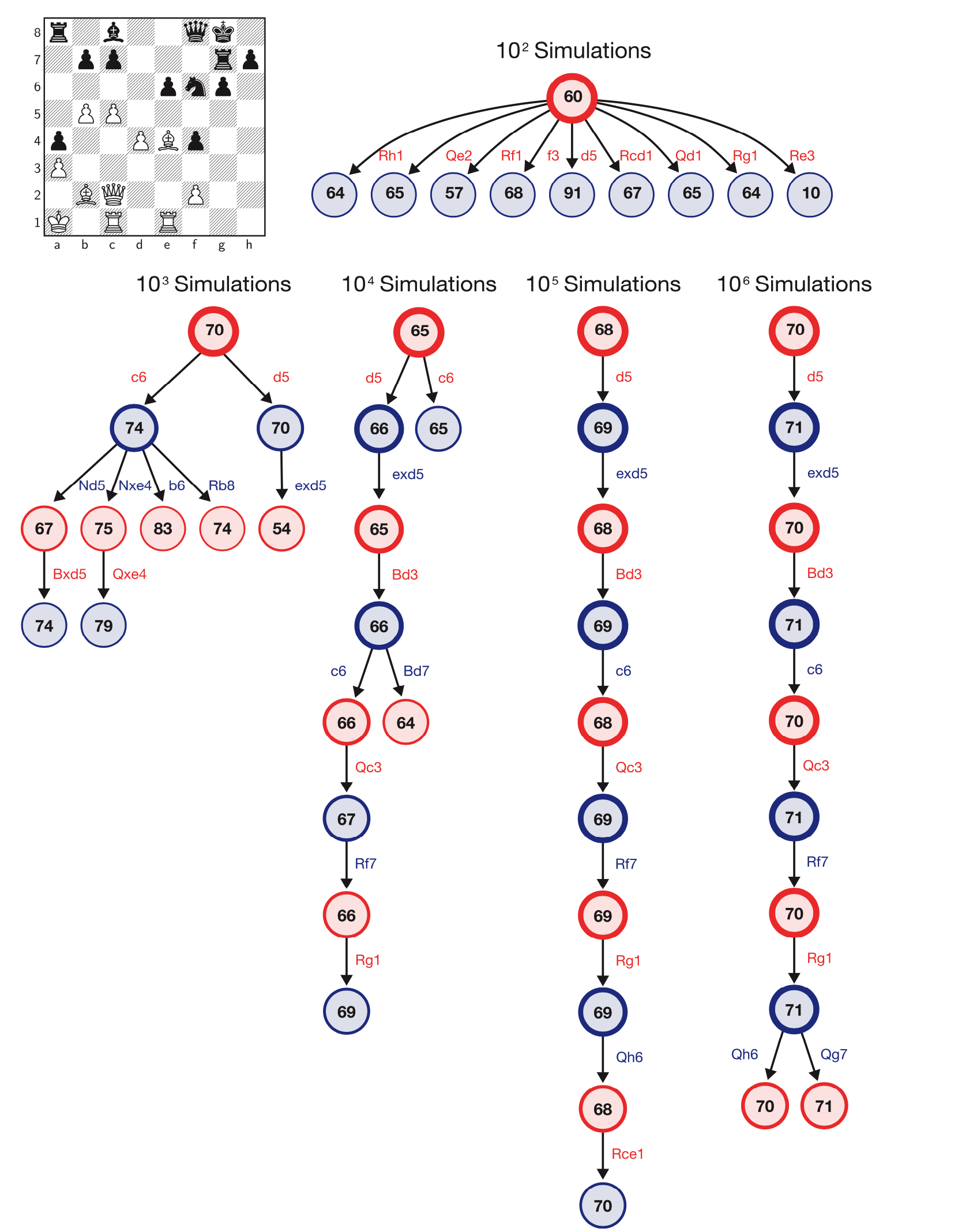

AlphaZero's results in the time odds matches suggest it is not only much stronger than any traditional chess engine, but that it also uses a much more efficient search for moves. According to DeepMind, AlphaZero uses a Monte Carlo tree search, and examines about 60,000 positions per second, compared to 60 million for Stockfish.
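The efficiency gap can be made concrete with some rough arithmetic. The sketch below uses the two search rates quoted above and assumes, as the article implies, that "N-to-1 time odds" means Stockfish received N times AlphaZero's thinking time. It also assumes both engines search at a constant rate, which is a simplification. The constant and function names are hypothetical.

```python
# Search rates quoted by DeepMind (positions examined per second).
ALPHAZERO_POSITIONS_PER_SEC = 60_000
STOCKFISH_POSITIONS_PER_SEC = 60_000_000


def stockfish_positions_per_alphazero_position(time_odds: float) -> float:
    """Positions Stockfish examines for each position AlphaZero examines.

    Assumption: Stockfish gets `time_odds` times as much thinking time,
    and both engines search at the constant rates above.
    """
    return (STOCKFISH_POSITIONS_PER_SEC * time_odds) / ALPHAZERO_POSITIONS_PER_SEC


for odds in (1, 10, 30):
    ratio = stockfish_positions_per_alphazero_position(odds)
    print(f"{odds}-to-1 time odds: Stockfish examines {ratio:,.0f}x as many positions")
```

At equal time Stockfish examines about 1,000 positions for every one AlphaZero looks at. Under these assumptions that figure grows to about 10,000 at 10-to-1 odds, where AlphaZero still won, and to about 30,000 at 30-to-1 odds, where Stockfish began to outscore it.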

An illustration of how AlphaZero searches for chess moves. Image by DeepMind via Science.

What can computer chess fans conclude after reading these results? AlphaZero has solidified its status as one of the elite chess players in the world. But the results are even more intriguing if you're following the ability of artificial intelligence to master general gameplay.

According to the journal article, the updated AlphaZero algorithm is identical across three challenging games: chess, shogi, and go. This version of AlphaZero was able to beat the top computer players of all three games after just a few hours of self-training, starting from just the basic rules of the games.

The updated AlphaZero results come exactly one year to the day since DeepMind unveiled the first, historic AlphaZero results in a surprise match vs Stockfish that changed chess forever.

Since then, an open-source project called Lc0 has attempted to replicate the success of AlphaZero, and the project has fascinated chess fans. Lc0 now competes along with the champion Stockfish and the rest of the world's top engines in the ongoing Chess.com Computer Chess Championship.

CCC fans will be pleased to see that some of the new AlphaZero games include "fawn pawns," the CCC-chat nickname for lone advanced pawns that cramp an opponent's position. Perhaps the establishment of these pawns is a critical winning strategy, as it seems AlphaZero and Lc0 have independently learned it.

DeepMind released 20 sample games chosen by GM Matthew Sadler from the 1,000-game match. Chess.com has selected three of these games with deep analysis by Stockfish 10 and video analysis by GM Robert Hess. You can download the 20 sample games at the bottom of this article, analyzed by Stockfish 10, and four sample games analyzed by Lc0.

Update: After this article was published, DeepMind released 210 sample games that you can download here.

We are also releasing 210 new chess games - including a top 20 selected by GM Matthew Sadler @gmmds - that show off its dynamic playing style and we hope will inspire chess players of all levels around the world. https://t.co/ZJDoaon5z0

— DeepMind (@DeepMindAI) December 6, 2018

Selected game 1 with analysis by Stockfish 10:

Selected game 2 with analysis by Stockfish 10:

Game 2 video analysis by GM Robert Hess:

Selected game 3 with analysis by Stockfish 10:

Game 3 video analysis by GM Robert Hess:

IM Anna Rudolf also made a video analysis of one of the sample games, calling it "AlphaZero's brilliancy."

The new version of AlphaZero trained itself to play chess starting just from the rules of the game, using machine-learning techniques to continually update its neural networks. According to DeepMind, 5,000 TPUs (Google's tensor processing unit, an application-specific integrated circuit for artificial intelligence) were used to generate the first set of self-play games, and then 16 TPUs were used to train the neural networks.

The total training time in chess was nine hours from scratch. According to DeepMind, it took the new AlphaZero just four hours of training to surpass Stockfish; by nine hours it was far ahead of the world-champion engine.

For the games themselves, Stockfish used 44 CPU (central processing unit) cores and AlphaZero used a single machine with four TPUs and 44 CPU cores. Stockfish had a hash size of 32GB and used Syzygy endgame tablebases.

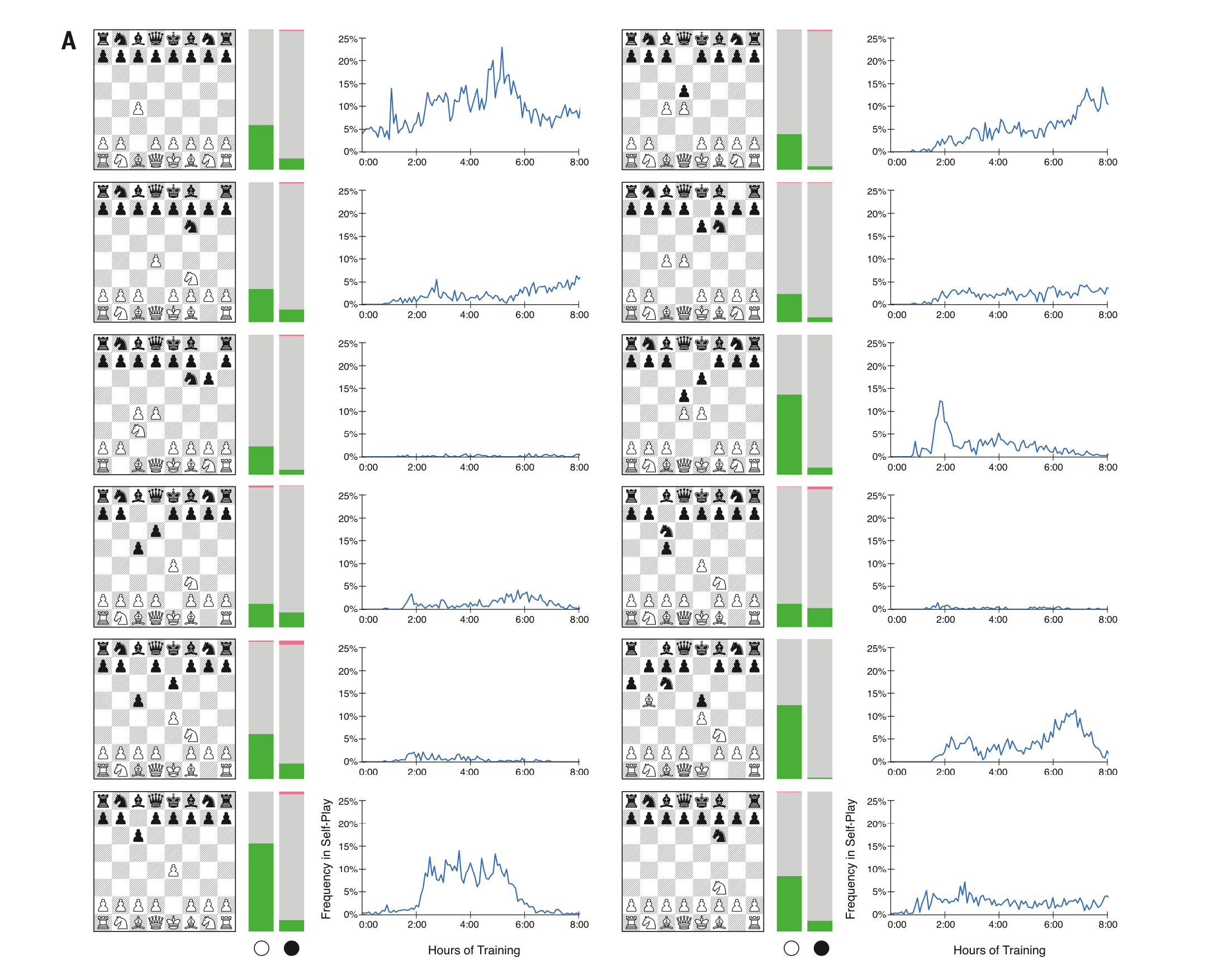

AlphaZero's results vs. Stockfish in the most popular human openings. In the left bar, AlphaZero plays White; in the right bar, AlphaZero is Black. Image by DeepMind via Science.

The sample games released were deemed impressive by chess professionals who were given preview access to them. GM Robert Hess categorized the games as "immensely complicated."

DeepMind itself noted the unique style of its creation in the journal article:

"In several games, AlphaZero sacrificed pieces for long-term strategic advantage, suggesting that it has a more fluid, context-dependent positional evaluation than the rule-based evaluations used by previous chess programs," the DeepMind researchers said.

The AI company also emphasized the importance of using the same AlphaZero version in three different games, touting it as a breakthrough in overall game-playing intelligence:

"These results bring us a step closer to fulfilling a longstanding ambition of artificial intelligence: a general game-playing system that can learn to master any game," the DeepMind researchers said.

I couldn't help but be pleased that AlphaZero plays in open, dynamic style. It's not just my style, but it's not the incomprehensible maneuvering we feared computer chess would become. My @sciencemagazine article: https://t.co/ftcKzYTsw0 https://t.co/85h44ebCrS

— Garry Kasparov (@Kasparov63) December 6, 2018

You can download the 20 sample games provided by DeepMind and analyzed by Chess.com using Stockfish 10 on a powerful computer. The first set of games contains 10 games with no opening book, and the second set contains games with openings from the 2016 TCEC (Top Chess Engine Championship).

20 games with analysis by Stockfish 10:

4 selected games with analysis by Lc0:

Love AlphaZero? You can watch the machine-learning chess project it inspired, Lc0, in the ongoing Computer Chess Championship now.